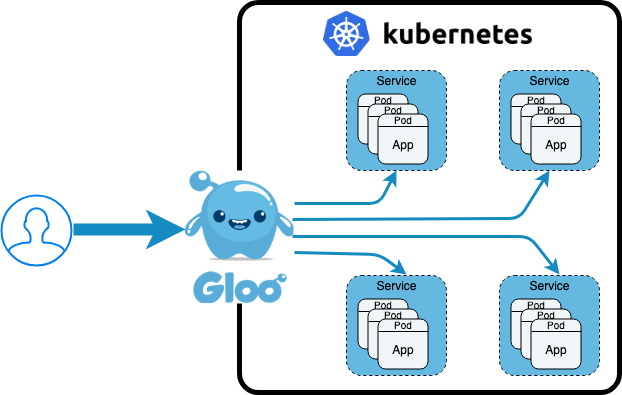

Kubernetes is excellent and makes it easier to create and manage highly distributed applications. The challenge then is how to share your great Kubernetes-hosted applications with the rest of the world. Many lean towards Kubernetes Ingress objects, and this article will show you how to use the open source Solo.io Gloo to fill this need.

Gloo is a function gateway that gives users many benefits, including sophisticated function-level routing and extensive service discovery with the introspection of OpenAPI (Swagger) definitions, gRPC reflection, Lambda discovery, and more. Gloo can also act as an Ingress controller, that is, it routes traffic from outside Kubernetes to services hosted in the cluster, based on the path routing rules defined in an Ingress object. I’m a big believer in showing technology through examples, so let’s quickly run through an example to show you what’s possible.

Prerequisites

This example assumes you’re running a local minikube instance and that you also have kubectl installed. You can run this same example on your favorite cloud provider’s managed Kubernetes cluster, though you’d need to make a few tweaks. You’ll also need Gloo installed. Let’s use Homebrew to set all of this up, and then start minikube and install Gloo. It will take a few minutes to download, install, and start everything on your local machine.

brew update
brew cask install minikube
brew install kubectl glooctl curl
minikube start
glooctl install ingress
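Before moving on, it can help to confirm that the Gloo components actually came up. The following is a minimal sketch, not part of the Gloo tooling: the function name wait_for_gloo is hypothetical, and it assumes the install placed its deployments in the gloo-system namespace (the namespace this article refers to later).

```shell
# Hypothetical helper: block until every deployment in gloo-system reports
# the Available condition, or fail after the given timeout (default 120s).
# Assumes Gloo was installed into the gloo-system namespace.
wait_for_gloo() {
  kubectl wait --namespace gloo-system \
    --for=condition=Available deployments --all \
    --timeout="${1:-120s}"
}
```

Calling wait_for_gloo 300s gives a slow machine more time; a non-zero exit status means something did not start within the timeout.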

One more thing before we dive into Ingress objects: let’s set up an example service deployed on Kubernetes that we can reference.

kubectl apply \
  --filename https://raw.githubusercontent.com/solo-io/gloo/master/example/petstore/petstore.yaml

Setting up an Ingress to our example Petstore

Let’s set up an Ingress object that routes all HTTP traffic to our petstore service. To make this a little more exciting and challenging (and who doesn’t like a good tech challenge?), let’s also configure a host domain, which will require a little extra curl magic to call correctly on our local Kubernetes cluster. The following Ingress definition will route all requests for http://gloo.example.com to our petstore service listening on port 8080 within our cluster. The petstore service exposes some REST functions on the path /api/pets that return JSON for the inventory of pets in our (small) store.

If you are trying this example in a public cloud Kubernetes instance, you’ll most likely need to configure a Cloud Load Balancer. Make sure you configure that Load Balancer for the service/ingress-proxy running in the gloo-system namespace.
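On a cloud cluster, one way to find the address the load balancer assigned to that service is to read it from the service status. This is a rough sketch under the assumption that ingress-proxy is exposed as a LoadBalancer-type service; the function name is hypothetical. Some providers report an IP and others a hostname, so the template prints whichever field is set.

```shell
# Hypothetical helper: print the external IP or hostname assigned to the
# ingress-proxy service in gloo-system. Prints nothing while the cloud
# load balancer is still being provisioned.
ingress_proxy_external_address() {
  kubectl get service ingress-proxy --namespace gloo-system \
    --output jsonpath='{.status.loadBalancer.ingress[0].ip}{.status.loadBalancer.ingress[0].hostname}'
}
```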

The important details of our example Ingress definition are:

- Annotation kubernetes.io/ingress.class: gloo, which is the standard way to mark an Ingress object as handled by a specific Ingress controller, i.e., Gloo. This requirement will go away soon as we add the ability for Gloo to be the cluster default Ingress controller.
- Path wildcard /.* to indicate that all traffic to http://gloo.example.com is routed to our petstore service.

cat <<EOF | kubectl apply --filename -
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: petstore-ingress
  annotations:
    kubernetes.io/ingress.class: gloo
spec:
  rules:
  - host: gloo.example.com
    http:
      paths:
      - path: /.*
        backend:
          serviceName: petstore
          servicePort: 8080
EOF

We can validate that Kubernetes created our Ingress correctly with the following command.

kubectl get ingress petstore-ingress

NAME               HOSTS              ADDRESS   PORTS   AGE
petstore-ingress   gloo.example.com             80      14h

To test, we’ll use curl to call our local cluster. As I said earlier, because we defined host: gloo.example.com in our Ingress, we need to do a little more to call it without touching DNS or our local /etc/hosts file. I’m going to use the relatively recent curl --connect-to option; you can read more about it in the curl man pages.

The glooctl command-line tool helps us get the local host IP and port for the proxy with the glooctl proxy address --name <ingress name> --port http command. It returns the address (host IP:port) of the Gloo Ingress proxy that gives us external access to our local Kubernetes cluster. If you are trying this example in a public cloud managed Kubernetes cluster, most providers will handle the DNS mapping between your specified domain (which you should own) and the Gloo Ingress service. In that case you do NOT need the --connect-to magic; a plain curl http://gloo.example.com/api/pets should work.

curl --connect-to gloo.example.com:80:$(glooctl proxy address --name ingress-proxy --port http) \
  http://gloo.example.com/api/pets

This should return the following JSON:

[{"id":1,"name":"Dog","status":"available"},{"id":2,"name":"Cat","status":"pending"}]
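To make the curl magic less magical, here is a small sketch of how the --connect-to value is put together. Per the curl man page, the option takes HOST1:PORT1:HOST2:PORT2 and sends requests addressed to HOST1:PORT1 to HOST2:PORT2 instead, while the Host header stays as HOST1, which is what lets the Ingress host rule match. The function name connect_to_arg and the proxy address 192.168.99.100:31500 are made up for illustration; your address will differ.

```shell
# Hypothetical helper: build the value for curl's --connect-to option.
#   $1: host used in the URL (must match the Ingress host rule)
#   $2: port used in the URL
#   $3: proxy address as "IP:port", e.g. the output of `glooctl proxy address`
connect_to_arg() {
  printf '%s:%s:%s\n' "$1" "$2" "$3"
}

# Example with a made-up proxy address:
connect_to_arg gloo.example.com 80 192.168.99.100:31500
# prints: gloo.example.com:80:192.168.99.100:31500
```

Against a live cluster you would pass the output of glooctl proxy address --name ingress-proxy --port http as the third argument, which yields the same string as the inline command substitution used above.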

TLS Configuration

These days, most people want to use TLS to secure their communications. Gloo Ingress can act as a TLS terminator, and we’ll quickly run through what that setup would look like.

Any Kubernetes Ingress doing TLS will need a Kubernetes TLS secret created, so let’s create a self-signed certificate we can use for our example gloo.example.com domain. The following two commands will produce a certificate and generate a TLS secret named my-tls-secret in minikube.

openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout my_key.key -out my_cert.cert -subj "/CN=gloo.example.com/O=gloo.example.com"

kubectl create secret tls my-tls-secret --key my_key.key --cert my_cert.cert
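If you want to double-check the certificate before wiring it into the Ingress, openssl can print its subject and validity window. This is only a sketch; the function name cert_summary is hypothetical, and the exact formatting of the subject line varies between OpenSSL versions.

```shell
# Hypothetical helper: print the subject and validity dates of a
# PEM-encoded certificate file.
#   $1: path to the certificate, e.g. my_cert.cert
cert_summary() {
  openssl x509 -in "$1" -noout -subject -dates
}
```

Running cert_summary my_cert.cert should show gloo.example.com in the subject along with notBefore and notAfter dates roughly a year apart.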

Now let’s update our Ingress object with the needed TLS configuration. It is important that the TLS host and the rules host match, and that secretName matches the name of the Kubernetes secret we created previously.

cat <<EOF | kubectl apply --filename -
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: petstore-ingress
  annotations:
    kubernetes.io/ingress.class: gloo
spec:
  tls:
  - hosts:
    - gloo.example.com
    secretName: my-tls-secret
  rules:
  - host: gloo.example.com
    http:
      paths:
      - path: /.*
        backend:
          serviceName: petstore
          servicePort: 8080
EOF

If all went well, our petstore should now be reachable at https://gloo.example.com. Let’s try it, again using our curl magic, which we need both to resolve the host and port and to validate our certificate. Notice that we’re asking glooctl for --port https this time, and we’re curling https://gloo.example.com on port 443. We’ll also have curl validate our TLS certificate using curl --cacert <my_cert.cert> with the certificate we created and used in our Kubernetes secret.

curl --cacert my_cert.cert \
  --connect-to gloo.example.com:443:$(glooctl proxy address --name ingress-proxy --port https) \
  https://gloo.example.com/api/pets
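For a quick pass/fail check rather than reading JSON, you could ask curl to print only the HTTP status code. The sketch below wraps the same request in a hypothetical function, petstore_https_status; it takes the certificate file and the proxy address as arguments so that nothing is hard-coded beyond the example host.

```shell
# Hypothetical helper: print only the HTTP status code of the TLS request.
#   $1: CA certificate file used to validate the server, e.g. my_cert.cert
#   $2: proxy address as "IP:port", e.g. from
#       glooctl proxy address --name ingress-proxy --port https
petstore_https_status() {
  curl --silent --output /dev/null --write-out '%{http_code}\n' \
    --cacert "$1" \
    --connect-to "gloo.example.com:443:$2" \
    https://gloo.example.com/api/pets
}
```

A result of 200 suggests both the route and the certificate validation worked; 000 typically means curl could not complete the connection or the TLS handshake.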

Next Steps

This was a quick tour of how Gloo can act as your Kubernetes Ingress controller with only minimal changes to your existing Kubernetes manifests. Please try it out and let us know what you think in our community Slack channel.

If you’re interested in powering up your Gloo superpowers, try Gloo in gateway mode (glooctl install gateway), which unlocks a set of Kubernetes CRDs (Custom Resources) that give you a more standard, and far more powerful, way of doing advanced traffic shifting, rate limiting, and more, without the annotation smell in your Kubernetes cluster. Check out these other articles for more details on Gloo’s extra powers.